DeepSeek-VL is an open-source vision-language model built for multimodal understanding. It lets machines interpret text and images together rather than handling each in isolation.

Using deep learning, DeepSeek-VL learns patterns and relationships across these two modalities from large amounts of training data, which lets it read an image in the context of the text that accompanies it.

Because it is built on a large language model, DeepSeek-VL can also handle language tasks such as translation, sentiment analysis, and text summarization, though its distinguishing applications are the ones that combine text with images.

What's New

DeepSeek-VL2, the successor to DeepSeek-VL, processes visual and textual information together: image features from the vision encoder are projected into the language model's input space and attended to alongside the text tokens.

Letting the two modalities exchange information in this way gives the model a more nuanced understanding of the relationships between visual elements and textual descriptions.

On the language side, DeepSeek-VL2 uses the Multi-head Latent Attention mechanism of the DeepSeekMoE models, which compresses the key-value cache into latent vectors. This keeps inference efficient over long sequences that mix visual and text tokens, so the model can still capture long-range dependencies and generate coherent responses.

This is particularly useful for tasks that depend on the relationships between different pieces of information, such as image captioning or visual question answering.

DeepSeek-VL2's Mixture-of-Experts (MoE) layers route each token to a small subset of expert sub-networks, so only a fraction of the model's parameters is activated for any given input.

This means the model can adapt on the fly which experts handle a given token, which improves its efficiency across diverse applications.

DeepSeek-VL2's dynamic tiling strategy splits a high-resolution image into local tiles and adds a global thumbnail view, so the model processes visual information at more than one scale, capturing both fine-grained details and high-level semantic concepts.

This allows DeepSeek-VL2 to maintain a comprehensive understanding of visual scenes, from individual objects to overall compositional structures, across images with different aspect ratios.
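To make the multi-scale idea concrete, here is a minimal sketch of how a tile grid could be chosen for an image. It is an illustration, not DeepSeek-VL2's actual code: the 384-pixel tile size, the cap of 9 tiles, and the helper names choose_grid and tile_boxes are all assumptions made for the example. It picks the grid whose aspect ratio wastes the least canvas area and prefers fewer tiles on a tie; a real implementation would also take the image's absolute resolution into account.

```python
from fractions import Fraction

TILE = 384       # assumed tile side in pixels (hypothetical constant)
MAX_TILES = 9    # assumed cap on local tiles per image (hypothetical constant)


def choose_grid(width: int, height: int) -> tuple[int, int]:
    """Return (cols, rows) of the tile grid whose shape best matches the image."""
    image_ratio = Fraction(width, height)
    candidates = [
        (cols, rows)
        for cols in range(1, MAX_TILES + 1)
        for rows in range(1, MAX_TILES + 1)
        if cols * rows <= MAX_TILES
    ]

    def score(grid):
        cols, rows = grid
        canvas_ratio = Fraction(cols, rows)
        # Fraction of the canvas covered by the image after an
        # aspect-preserving resize; 1 means no padding at all.
        fill = min(image_ratio / canvas_ratio, canvas_ratio / image_ratio)
        # Higher fill wins; on a tie, fewer tiles win.
        return (fill, -(cols * rows))

    return max(candidates, key=score)


def tile_boxes(cols: int, rows: int) -> list[tuple[int, int, int, int]]:
    """Pixel boxes (left, top, right, bottom) of each local tile, row by row."""
    return [
        (c * TILE, r * TILE, (c + 1) * TILE, (r + 1) * TILE)
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    cols, rows = choose_grid(1920, 1080)
    print(cols, rows, tile_boxes(cols, rows))
```

Each local tile would be encoded separately, and a downscaled copy of the whole image would supply the global view.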

Why Care?

DeepSeek-VL is open source, so you can download the weights, run the model on your own hardware, and inspect how it behaves instead of relying on a closed API.

It is also aimed at real-world inputs rather than only natural photographs: logical diagrams, web pages, formulas, scientific literature, and charts. That makes it a useful tool for researchers and developers who need to extract information from documents and screenshots.

Much practical information sits in images of text, tables, and figures. Models like DeepSeek-VL make that content available to the same language tooling you already use for plain text.

Model Overview

DeepSeek-VL is an open-source Vision-Language (VL) model designed for real-world vision and language understanding. It handles logical diagrams, web pages, formulas, scientific literature, and natural images, and supports embodied-intelligence tasks in complex scenarios.

The model can relate images to text, generate text from images, and answer questions about images. This is made possible by its hybrid vision encoder, which combines SigLIP-L and SAM-B.

DeepSeek-VL2 is designed to excel in various vision-language tasks, including visual question answering, optical character recognition, document understanding, table comprehension, chart interpretation, and visual grounding.

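DeepSeek-VL2 comes in three variants, each tailored to different computational requirements and use cases:

- DeepSeek-VL2-Tiny: 1.0B activated parameters

- DeepSeek-VL2-Small: 2.8B activated parameters

- DeepSeek-VL2: 4.5B activated parameters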

The model series achieves competitive or state-of-the-art performance with similar or fewer activated parameters compared to existing open-source dense and MoE-based models.
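The term "activated parameters" comes from how MoE layers work: a gate scores every expert for each token, and only the top few experts actually run. The sketch below shows generic top-k routing for a single token. It is not DeepSeek-VL2's implementation; the function names, the number of experts, and the value of top_k are hypothetical and chosen only for illustration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_scores: list[float], top_k: int = 2) -> dict[int, float]:
    """Pick the top_k experts for one token and renormalise their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def activated_fraction(num_experts: int, top_k: int) -> float:
    """Share of expert parameters used per token (equal-sized experts assumed)."""
    return top_k / num_experts


if __name__ == "__main__":
    # Hypothetical gate scores for one token over four experts.
    print(route_token([0.1, 2.0, -1.0, 1.5], top_k=2))
    print(activated_fraction(num_experts=8, top_k=2))
```

Because the unselected experts are skipped entirely, compute per token tracks the activated parameter count rather than the total model size.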

Specifications

DeepSeek-VL-7b-base is built on DeepSeek-LLM-7b-base, which was trained on approximately 2T text tokens. The full vision-language model was then trained on around 400B vision-language tokens.

It uses SigLIP-L and SAM-B as a hybrid vision encoder and supports 1024 x 1024 image input.

DeepSeek-VL-7b-base is also the foundation for a more specialized model, DeepSeek-VL-7b-chat, which is designed specifically for conversational applications.

Here's a breakdown of the two models:

- DeepSeek-VL-7b-base: the pretrained base model, with the SigLIP-L and SAM-B hybrid vision encoder and 1024 x 1024 image input

- DeepSeek-VL-7b-chat: built on DeepSeek-VL-7b-base and designed for conversational applications

Format

DeepSeek-VL takes images and text as input. Images pass through the hybrid vision encoder, which combines SigLIP-L and SAM-B, at up to 1024 x 1024 resolution, so the model can work with high-resolution inputs such as dense documents.

The language side is built on the DeepSeek-LLM-7b-base model, which was trained on approximately 2T text tokens. That large text corpus gives the model broad language coverage to draw on when it answers questions about an image.

Supported Data Formats

DeepSeek-VL accepts two kinds of input, images and text, and it helps to know how each is handled.

Images are processed at a resolution of 1024 x 1024, so images of other sizes have to be resized to fit, and detail beyond that resolution is lost.

Both text and images need to be prepared with the VLChatProcessor class, which turns a conversation and its images into the format DeepSeek-VL expects.

Here are the supported data formats in a quick rundown:

- Images: 1024 x 1024 input resolution

- Text: requires pre-processing using VLChatProcessor
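If you want to estimate how an image will fit into a 1024 x 1024 input before handing it to the processor, the arithmetic can be sketched as below. This assumes an aspect-preserving resize followed by centered padding, which is a common approach but not confirmed here as what VLChatProcessor does internally; fit_to_square is a hypothetical helper, not part of the DeepSeek-VL package.

```python
def fit_to_square(width: int, height: int, target: int = 1024) -> dict:
    """Compute the resized size and padding needed to fit an image in a square.

    Assumes aspect-preserving resize plus centered padding (an assumption,
    not necessarily what the model's own processor does).
    """
    # Scale so that the longer side exactly matches the target.
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))

    # Split the leftover space evenly between the two sides.
    pad_x, pad_y = target - new_w, target - new_h
    left, top = pad_x // 2, pad_y // 2
    return {
        "size": (new_w, new_h),
        "padding": (left, top, pad_x - left, pad_y - top),  # left, top, right, bottom
    }


if __name__ == "__main__":
    print(fit_to_square(2048, 1024))
```

A 2048 x 1024 image, for example, is halved to 1024 x 512, which shows how much detail a wide screenshot gives up at this input size.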

Performance

DeepSeek-VL handles a wide range of inputs, including logical diagrams, web pages, and natural images, at up to 1024 x 1024 resolution.

Its performance is particularly noteworthy in visual question answering, where it has to comprehend complex visual scenes and give accurate, contextually relevant answers to diverse queries. Its authors report state-of-the-art or competitive results among models of the same size on a range of visual-language benchmarks.

DeepSeek-VL has also shown strong performance in zero-shot scenarios, where it applies what it has learned to novel tasks without task-specific training.

Accuracy

DeepSeek-VL is accurate across a broad set of tasks, which comes down to its hybrid vision encoder and its multimodal training.

Its accuracy is particularly noteworthy on logical diagrams and formulas, where the model has to read fine visual detail and then reason about it.

Here are the tasks where DeepSeek-VL has demonstrated high accuracy:

- Logical diagram understanding

- Web page analysis

- Formula recognition

- Scientific literature comprehension

- Natural image processing

- Embodied intelligence in complex scenarios

Comparison with Other Models

DeepSeek-VL2 stands out among vision-language models for its efficient use of parameters: its MoE architecture activates only part of the network for each token, which makes its benchmark results notable for the compute involved.

According to its authors, it achieves similar or better results on various benchmarks than existing open-source dense and MoE-based models with similar or more activated parameters.

DeepSeek-VL2 balances strong language understanding with strong performance on visual tasks, which makes it well suited for applications that require sophisticated reasoning over both textual and visual information.

Chart Interpretation

DeepSeek-VL2 can analyze and describe charts and graphs, making it useful for business intelligence applications, automated report generation, and data visualization interpretation.

For data-driven decision-making, this means you can get a quick textual summary of the trends and patterns in a chart without reading the values off by hand.

Automating chart interpretation saves time and effort, freeing you to focus on higher-level tasks and strategy.

Quick Start

To get started with DeepSeek-VL, clone the project's GitHub repository and install its dependencies by running pip install -e . from the repository root (the trailing dot tells pip to install the current directory). This gives you the deepseek_vl package with the tools you need to work with the model.

Next, load the model using the model_path variable. In the example, this is set to "deepseek-ai/deepseek-vl-7b-chat", the model's identifier on Hugging Face. Replace it with a local path if you've downloaded the weights yourself.

Here's a step-by-step guide to get you started:

- Install the necessary dependencies by running pip install -e . in the cloned repository.

- Load the model using the model_path variable.

- Use the VLChatProcessor and MultiModalityCausalLM to process inputs and generate responses.
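The steps above can be sketched as follows. This is adapted from memory of the project's published quick-start example and has not been verified against the current repository, so treat the exact call names and arguments as assumptions and check them before relying on them. build_conversation and run_inference are hypothetical wrapper names introduced here, and the image path is a placeholder. The heavy imports sit inside run_inference so the file can be imported without a GPU or the model weights. MultiModalityCausalLM is the class that AutoModelForCausalLM is expected to return once trust_remote_code is enabled.

```python
def build_conversation(image_path: str, question: str) -> list[dict]:
    """Build the two-turn conversation structure the chat processor consumes."""
    return [
        {
            "role": "User",
            # The placeholder marks where the image is spliced into the prompt.
            "content": f"<image_placeholder>{question}",
            "images": [image_path],
        },
        # An empty assistant turn asks the model to generate the reply.
        {"role": "Assistant", "content": ""},
    ]


def run_inference(
    conversation: list[dict],
    model_path: str = "deepseek-ai/deepseek-vl-7b-chat",
    max_new_tokens: int = 512,
) -> str:
    """Load the model and answer the conversation (needs a CUDA GPU)."""
    import torch
    from transformers import AutoModelForCausalLM
    from deepseek_vl.models import VLChatProcessor
    from deepseek_vl.utils.io import load_pil_images

    processor = VLChatProcessor.from_pretrained(model_path)
    tokenizer = processor.tokenizer
    model = AutoModelForCausalLM.from_pretrained(model_path, trust_remote_code=True)
    model = model.to(torch.bfloat16).cuda().eval()

    # Load the images referenced in the conversation and batch everything.
    pil_images = load_pil_images(conversation)
    inputs = processor(
        conversations=conversation, images=pil_images, force_batchify=True
    ).to(model.device)

    # Merge image features and text tokens into one embedding sequence.
    inputs_embeds = model.prepare_inputs_embeds(**inputs)
    outputs = model.language_model.generate(
        inputs_embeds=inputs_embeds,
        attention_mask=inputs.attention_mask,
        pad_token_id=tokenizer.eos_token_id,
        bos_token_id=tokenizer.bos_token_id,
        eos_token_id=tokenizer.eos_token_id,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        use_cache=True,
    )
    return tokenizer.decode(outputs[0].cpu().tolist(), skip_special_tokens=True)


if __name__ == "__main__":
    # "./example.jpg" is a placeholder path; point it at a real image.
    chat = build_conversation("./example.jpg", "Describe this image.")
    print(run_inference(chat))
```

Keeping the conversation builder separate from the model call makes it easy to test prompt construction without loading several gigabytes of weights.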

Image Input Limitations

DeepSeek-VL processes images at up to 1024 x 1024 pixels, so detail finer than that resolution isn't available to the model.

Here are some key considerations when working with image input:

- Image quality: The model may not perform well with low-quality or distorted images.

- Image complexity: DeepSeek-VL might struggle with images that contain complex or abstract concepts.

Implementation

To use DeepSeek-VL2 in your projects, you can build on the Hugging Face Transformers library, which provides a convenient interface for loading and running the model.

A basic example only covers setup. In a real-world application, you also need to handle image loading, tokenization, and model inference to generate responses from the visual and textual inputs, which takes a more complete setup.

Future Directions and Improvements

DeepSeek-VL2 is a powerful tool that's constantly evolving, and there are several areas where it's likely to see significant improvements. One potential direction is increasing the model size to push the boundaries of performance, while maintaining efficiency through advanced MoE techniques.

Larger model sizes could unlock new capabilities, but it's essential to balance size with efficiency to keep the model running smoothly. I've seen this trade-off in other AI models, where bigger isn't always better.

Developing more sophisticated fine-tuning techniques for specific domains or tasks could enhance the model's adaptability to specialized use cases. In practice, this means continuing to train the model on domain-specific data so that it performs better on that data or task.

Multimodal fusion is another area with room to grow, for example by incorporating audio or other sensory inputs alongside vision and language. This could open up new possibilities for applications like interactive visual dialogue systems.

Real-time processing is also an area of interest, with the goal of optimizing the model for live video analysis or other real-time applications. This could involve tweaking the model's architecture or using specialized hardware to speed up processing.

Addressing potential biases and ensuring responsible use of the technology is crucial, and will likely be an ongoing focus for the DeepSeek-VL2 community. This includes using the model in a way that's fair and respectful to all users.

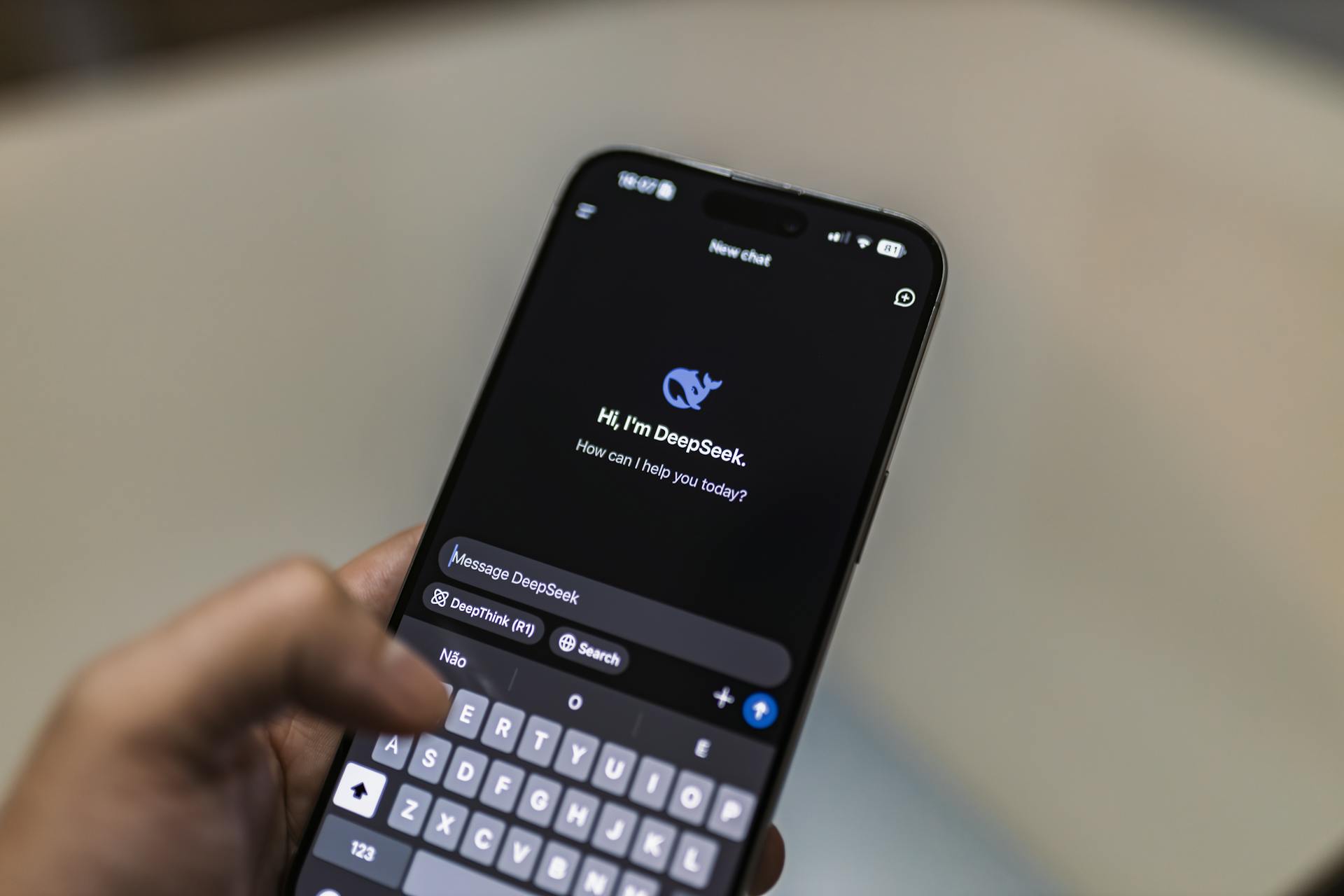

Featured Images: pexels.com